"Convolutional neural network-based segmentation network applied to image recognition of angiodysplasias lesion under capsule endoscopy", a joint research effort between Ruijin Hospital, Shanghai Jiao Tong University School of Medicine (Ruijin Hospital) and JINSHAN, was recently published in the World Journal of Gastroenterology. This is the first paper to propose using semantic segmentation networks to detect angiodysplasias. The full article can be found at https://www.wjgnet.com/1007-9327/full/v29/i5/879.htm.

AI-assisted diagnosis has already been applied successfully to improve the detection rate of small-intestinal vascular malformations and to reduce doctors' workload. However, the deep learning models in mainstream use are currently classification networks and object detection networks, which label whole images or draw bounding boxes rather than outlining lesions pixel by pixel. To address this limitation, researchers at Ruijin Hospital and JINSHAN proposed a novel semantic segmentation model built on a feature fusion convolutional neural network (CNN) to further evaluate the effectiveness and feasibility of AI-assisted capsule endoscopy (CE) reading.

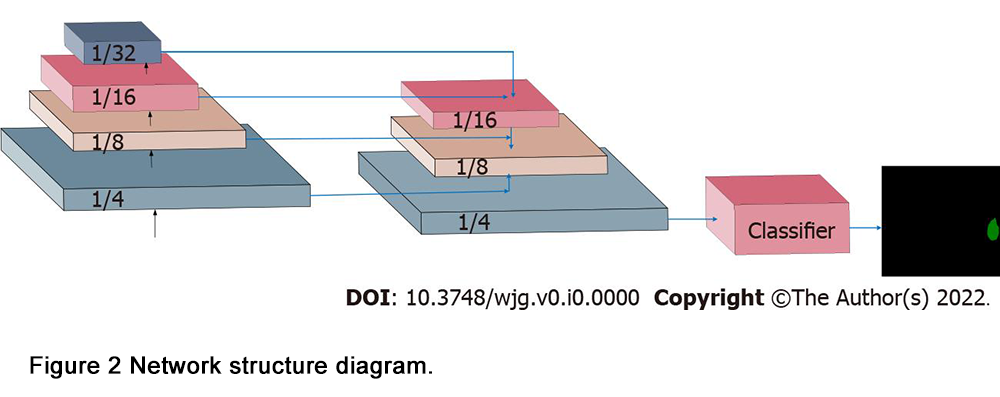

This model employed ResNet-50 as the backbone network and incorporated a fusion mechanism to integrate shallow and deep features for pixel-level classification of CE images, enabling automatic recognition and segmentation of vascular malformations. Additionally, the model could generate lesion contours for better visualization.
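
The article does not give implementation details beyond the ResNet-50 backbone and the fusion of shallow and deep features. As a rough, hypothetical illustration of the general idea (not the authors' network), the sketch below upsamples a coarse "deep" feature map to the resolution of a "shallow" one, concatenates the two channel-wise, applies a per-pixel linear classifier, and then extracts the lesion's contour pixels from the resulting mask. The feature values, the classifier weights and bias, and the 4-neighbour boundary rule are all assumptions chosen for readability; a real model learns its weights and works on far larger feature maps.

```python
from typing import List, Tuple

Grid = List[List[float]]  # one feature channel, indexed as [row][col]


def upsample_nearest(fmap: Grid, factor: int) -> Grid:
    """Nearest-neighbour upsampling: every cell becomes a factor x factor block."""
    out: Grid = []
    for row in fmap:
        wide = [v for v in row for _ in range(factor)]
        for _ in range(factor):
            out.append(list(wide))
    return out


def fuse(shallow: List[Grid], deep: List[Grid], factor: int) -> List[Grid]:
    """Channel-wise fusion: shallow channels plus deep channels upsampled to the same size."""
    return shallow + [upsample_nearest(ch, factor) for ch in deep]


def classify_pixels(channels: List[Grid], weights: List[float], bias: float) -> List[List[int]]:
    """Hypothetical 1x1 classifier: weighted channel sum per pixel, 1 (lesion) if score > 0."""
    height, width = len(channels[0]), len(channels[0][0])
    mask = []
    for r in range(height):
        row = []
        for c in range(width):
            score = bias + sum(w * ch[r][c] for w, ch in zip(weights, channels))
            row.append(1 if score > 0 else 0)
        mask.append(row)
    return mask


def contour(mask: List[List[int]]) -> List[Tuple[int, int]]:
    """Lesion pixels with at least one 4-neighbour that is background or off the image."""
    height, width = len(mask), len(mask[0])
    edge = []
    for r in range(height):
        for c in range(width):
            if not mask[r][c]:
                continue
            for dr, dc in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                rr, cc = r + dr, c + dc
                if not (0 <= rr < height and 0 <= cc < width) or not mask[rr][cc]:
                    edge.append((r, c))
                    break
    return edge


# Toy example: one 4x4 "shallow" channel and one 2x2 "deep" channel (made-up values).
shallow = [[[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]]
deep = [[[0.0, 0.0], [0.0, 1.0]]]
fused = fuse(shallow, deep, factor=2)
mask = classify_pixels(fused, weights=[1.0, 1.0], bias=-0.5)
print(mask)           # [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 1], [0, 0, 1, 1]]
print(contour(mask))  # [(1, 1), (1, 2), (2, 1), (2, 3), (3, 2), (3, 3)]
```

The shallow channel carries fine spatial detail while the upsampled deep channel carries coarse, higher-level evidence; fusing them lets a single per-pixel decision draw on both, and the contour step turns the mask into an outline that can be overlaid on the original frame.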

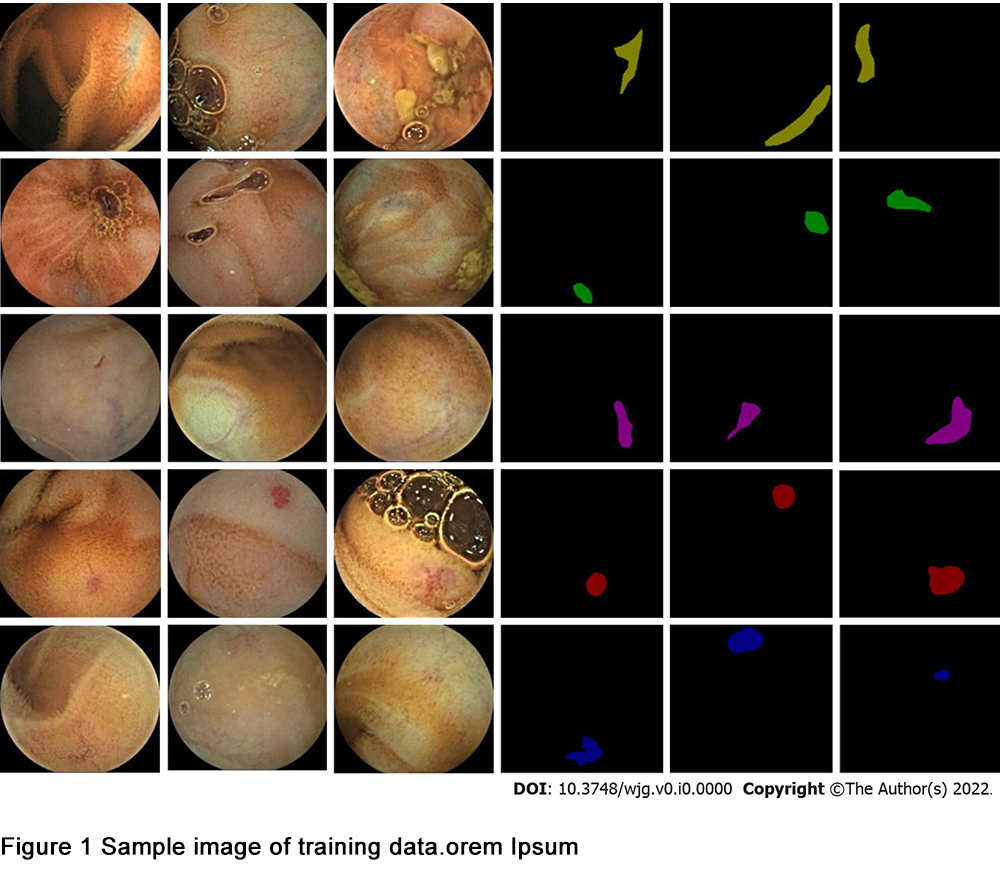

Data from 378 patients with vascular malformations who underwent OMOM CE at Ruijin Hospital were collected to train and validate the model: 178 cases formed the training set and the remaining 200 cases formed the test set.
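
The article reports only the case counts, not how cases were assigned. A common practice, assumed here rather than taken from the study, is to split at the patient level so that frames from one capsule examination never appear in both the training and the test set. The patient IDs, frame names, and random seed below are hypothetical.

```python
import random
from typing import List, Tuple

Frame = Tuple[str, str]  # (patient_id, frame_name)


def split_by_patient(patient_ids: List[str], n_train: int, seed: int = 0) -> Tuple[List[str], List[str]]:
    """Shuffle the unique patient IDs and put whole patients into either train or test."""
    ids = sorted(set(patient_ids))  # sort first so the shuffle is reproducible for a given seed
    if not 0 <= n_train <= len(ids):
        raise ValueError("n_train must be between 0 and the number of patients")
    random.Random(seed).shuffle(ids)
    return ids[:n_train], ids[n_train:]


def assign_frames(frames: List[Frame], train_ids: List[str]) -> Tuple[List[Frame], List[Frame]]:
    """Route every frame to the set its patient belongs to."""
    train_set = set(train_ids)
    train_frames = [f for f in frames if f[0] in train_set]
    test_frames = [f for f in frames if f[0] not in train_set]
    return train_frames, test_frames


# Hypothetical patient IDs matching the cohort sizes reported in the article.
patients = [f"P{i:03d}" for i in range(378)]
train_ids, test_ids = split_by_patient(patients, n_train=178)
print(len(train_ids), len(test_ids))  # 178 200

# Three made-up frames per patient, only to show that frames follow their patient.
frames = [(pid, f"{pid}_frame{k}") for pid in patients for k in range(3)]
train_frames, test_frames = assign_frames(frames, train_ids)
print(len(train_frames), len(test_frames))  # 534 600
```

Splitting IDs before expanding to frames is the choice that matters here: a frame-level shuffle could place near-identical consecutive frames from the same patient in both sets and inflate the test scores.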

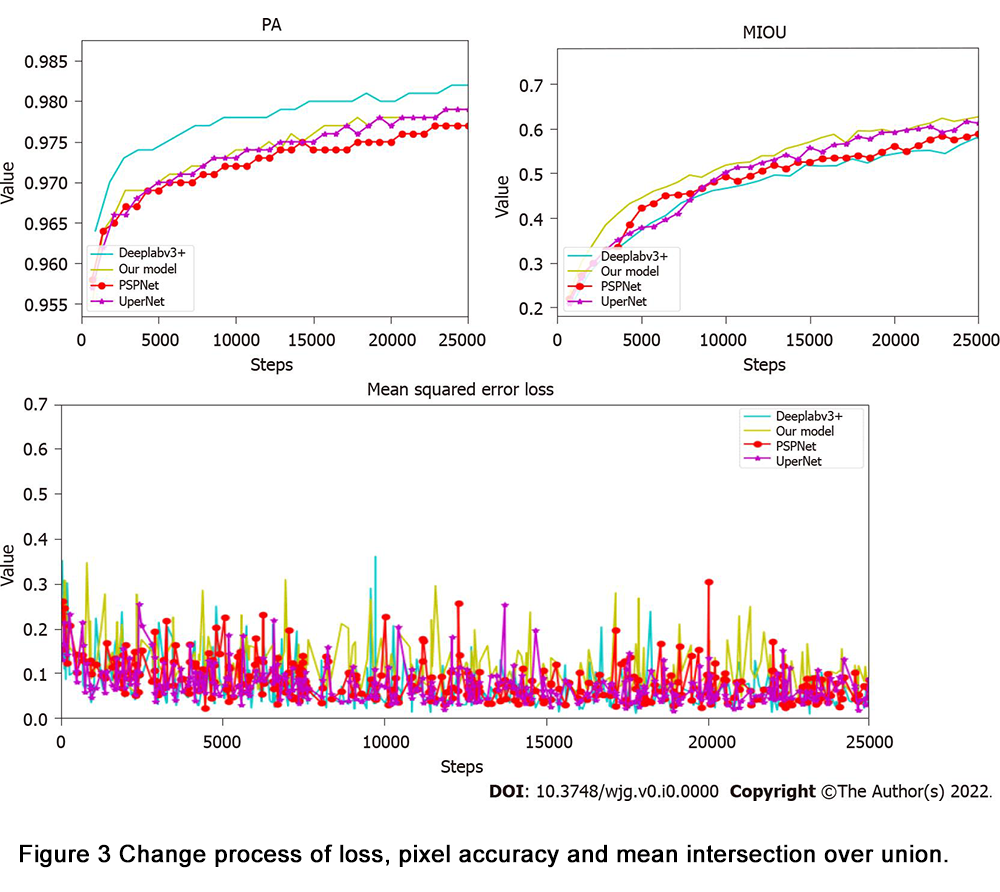

The model achieved satisfactory results on the test set: a pixel accuracy of 99%, a mean intersection over union (mIoU) of 0.69, a negative predictive value of 98.74%, and a positive predictive value of 94.27%. The model has 46.38 M parameters and requires 467.2 G floating-point operations (FLOPs), and segmenting and recognizing a single image took 0.6 seconds.
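
The reported metrics have standard definitions for binary segmentation. The sketch below computes them from pixel-level counts, assuming a two-class setting (background vs. lesion) in which the mean (average) intersection over union is the mean of the two per-class IoUs. The article does not say whether the predictive values were computed per pixel or per image, so computing them per pixel here is an assumption, and the masks are made up.

```python
from typing import Dict, List


def confusion(pred: List[List[int]], truth: List[List[int]]) -> Dict[str, int]:
    """Count pixel-level TP/FP/TN/FN for a binary lesion mask (1 = lesion, 0 = background)."""
    counts = {"tp": 0, "fp": 0, "tn": 0, "fn": 0}
    for prow, trow in zip(pred, truth):
        for p, t in zip(prow, trow):
            if p and t:
                counts["tp"] += 1
            elif p:
                counts["fp"] += 1
            elif t:
                counts["fn"] += 1
            else:
                counts["tn"] += 1
    return counts


def ratio(num: int, den: int) -> float:
    # An empty denominator is reported as 0.0 here; other conventions exist.
    return num / den if den else 0.0


def segmentation_metrics(c: Dict[str, int]) -> Dict[str, float]:
    tp, fp, tn, fn = c["tp"], c["fp"], c["tn"], c["fn"]
    iou_lesion = ratio(tp, tp + fp + fn)
    iou_background = ratio(tn, tn + fn + fp)  # background treated as the positive class
    return {
        "pixel_accuracy": ratio(tp + tn, tp + fp + tn + fn),
        "mIoU": (iou_lesion + iou_background) / 2,
        "PPV": ratio(tp, tp + fp),  # share of predicted lesion pixels that are truly lesion
        "NPV": ratio(tn, tn + fn),  # share of predicted background pixels that are truly background
    }


# Made-up 4x4 example: the prediction marks one extra background pixel as lesion.
truth = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 0], [0, 0, 0, 0]]
pred = [[0, 0, 0, 0], [0, 1, 1, 0], [0, 1, 1, 1], [0, 0, 0, 0]]
c = confusion(pred, truth)
print(c)  # {'tp': 4, 'fp': 1, 'tn': 11, 'fn': 0}
print(segmentation_metrics(c))
```

Because lesions usually cover only a small fraction of a CE frame, pixel accuracy is dominated by background pixels and can be high even when lesion overlap is modest; IoU is computed per class and is therefore a stricter measure of how well the lesion itself is outlined.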

The results suggest that a deep learning-based segmentation network is an effective and feasible approach to diagnosing vascular abnormalities. This opens up new directions for future applications of AI-assisted CE reading, and the technology will soon be implemented in OMOM products.